Escaping Carrier-Grade NAT with Wireguard and a VPS

When you’re stuck with excellent, symmetric 1Gbps internet behind CGNAT, only to find out that a static IP costs an extra £8/month, the logical option is to find a way around it… right? At least that’s the conclusion I came to, and it led me down a rabbit hole into the world of networking. Reluctant to add yet another subscription to the ever-growing list, I decided to tackle CGNAT head-on.

With CGNAT (Carrier-Grade NAT), your internet connection doesn’t have a public IPv4 address. Instead, it shares an IPv4 address with multiple other users on the same network. This means you can’t create an A record with your DNS provider pointing at your own IPv4 address, since you don’t have one that routes traffic to your local server.

When designing this setup to get around this constraint, I had several key requirements:

- SSL termination should occur on my local server.

- Traefik should be able to read the original request IP to properly pass it to Crowdsec.

- The resulting bandwidth through the VPS and local server should not be less than the maximum available on my local network.

- Cloudflare should still be able to proxy the VPS’s IPv4 address where possible.

To meet these goals, I used the VPS to forward incoming HTTPS traffic to my local server, with SSL termination happening on Traefik. Because the VPS has to pass the encrypted traffic through untouched, this narrowed down my options to proxies that support plain TCP forwarding. I chose HAProxy on the VPS and Traefik on the local server, both of which support ProxyProtocol to communicate with each other.

Requirements

To get this working, you will need the following:

- A local server behind the CGNAT, where you can install Wireguard. For me, this is a Dell Optiplex running Ubuntu 24.04 in Proxmox, but even a Raspberry Pi will work.

- A VPS with a public IPv4 address. I’ve opted to use an Oracle Cloud Flex A1 instance with 1 CPU and 6GB RAM, as this provides 1Gbps bandwidth, which matches the speed of my home network.

Cloud VPS Initial Setup

Check your cloud provider’s documentation to set up your server. At a minimum, you’ll want to do the following to secure your VPS:

- Set up a sudo user.

- Disable root login (set PermitRootLogin no in /etc/ssh/sshd_config).

- Update the OS: sudo apt update && sudo apt upgrade.

Additionally, it’s recommended to:

- Set up key-based authentication and disable password authentication.

- Install fail2ban, to block IP addresses that fail login attempts:

$ sudo apt install fail2ban

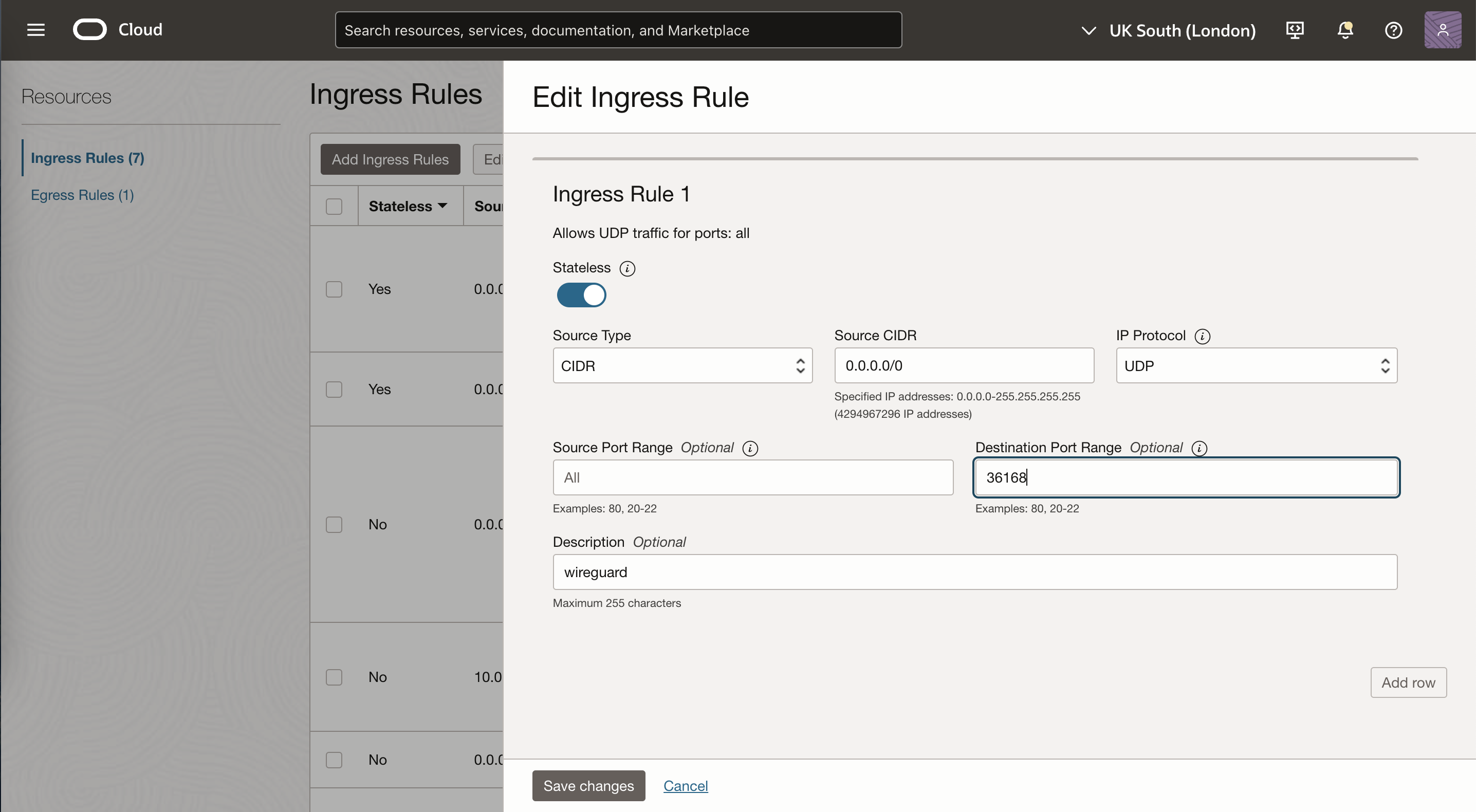

Next, we will want to allow inbound and outbound connections on ports 80, 443 and a chosen port for Wireguard, which I chose as 36168, however you can choose any valid port. On your VPS provider, edit your VNIC to allow 36168/UDP traffic from any source, in Oracle this is done at the Subnet and adding a stateless Ingress rule:

Create two more Ingress rules for HTTP(80/TCP) and HTTPS(443/TCP) that are not stateless. This will allow inbound web traffic to the VPS.

NOTE: On the Oracle Ubuntu 24.04 image, ufw is not installed by default as the firewall is configured at the VNIC, but on your instance it might be. If this is the case, make sure to add the following rules (note that Wireguard uses UDP, not TCP):

$ sudo ufw allow 443/tcp

$ sudo ufw allow 80/tcp

$ sudo ufw allow 36168/udp

Wireguard Setup

To install WireGuard on Ubuntu 24.04, run the following on both systems:

$ sudo apt install wireguard

For other operating systems, follow the Wireguard installation instructions for your platform. Once Wireguard is installed, we need to generate public and private keys for authentication on both systems.

Generate Authentication Keys

On each server run the following to generate private and public keys:

$ sudo -i

$ cd /etc/wireguard

$ umask 077

$ wg genkey | tee privatekey | wg pubkey > publickey

Now the private and public keys will be in /etc/wireguard/privatekey and /etc/wireguard/publickey, respectively.

We also want to generate a PreSharedKey using wg genpsk. Run it once and make a note of the output, as the same key goes into both configurations. This is optional, but adds an additional layer of symmetric-key cryptography.

Next, we will set up the wg0 interface at /etc/wireguard/wg0.conf. On both machines, substitute your own values for the placeholders in angle brackets.

Local Server Configuration

On the Wireguard VPN, we will assign the local server the IP 192.168.5.2 and use the VPS as its DNS server. If 192.168.5.0/24 clashes with any of your existing networks, change the subnet, otherwise you will encounter routing issues.

[Interface]

PrivateKey = <Local PrivateKey>

Address = 192.168.5.2/24

DNS = 192.168.5.1

[Peer]

PublicKey = <VPS PublicKey>

PresharedKey = <PreShared Key>

Endpoint = <VPS Public IPv4>:<Wireguard Port>

AllowedIPs = 192.168.5.1/24, ::0/0
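A quick way to check for a subnet clash before bringing the tunnel up is to compare the tunnel subnet against the networks the machine already routes. The following is a minimal sketch in POSIX shell, not part of Wireguard itself: the helper names ip_to_int and cidr_overlap are made up for illustration, it only handles IPv4, and it expects every argument in address/prefix form.

```shell
# Hypothetical helpers (not part of Wireguard): IPv4-only CIDR overlap check.

# Convert a dotted-quad address such as 192.168.5.0 into a 32-bit integer.
ip_to_int() (
    IFS=. read -r o1 o2 o3 o4 <<EOF
$1
EOF
    echo $(( (o1 << 24) + (o2 << 16) + (o3 << 8) + o4 ))
)

# Succeed (exit 0) when the two CIDR blocks share at least one address.
# Two blocks overlap exactly when they agree on the bits of the shorter prefix.
cidr_overlap() (
    a_ip=${1%/*}; a_len=${1#*/}
    b_ip=${2%/*}; b_len=${2#*/}
    len=$a_len
    if [ "$b_len" -lt "$len" ]; then len=$b_len; fi
    if [ "$len" -eq 0 ]; then
        mask=0
    else
        mask=$(( (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
    fi
    [ $(( $(ip_to_int "$a_ip") & mask )) -eq $(( $(ip_to_int "$b_ip") & mask )) ]
)

# A 192.168.1.0/24 home LAN does not collide with the tunnel subnet...
cidr_overlap 192.168.5.0/24 192.168.1.0/24 || echo "no clash with 192.168.1.0/24"
# ...but a wider 192.168.0.0/16 network would.
cidr_overlap 192.168.5.0/24 192.168.0.0/16 && echo "clash with 192.168.0.0/16"
```

The networks to compare against can be read from the output of ip -4 route on the local server.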

VPS Configuration

This is similar to the local server, except that the VPS gets the address 192.168.5.1. Note that we are setting the MTU to 1500, which is the default on most home networks.

[Interface]

PrivateKey = <VPS PrivateKey>

Address = 192.168.5.1/24

MTU = 1500

ListenPort = <Wireguard Port>

[Peer]

PublicKey = <Local PublicKey>

PresharedKey = <PreShared Key>

AllowedIPs = 192.168.5.2/32, 192.168.0.0/32, 10.0.0.0/24
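The configuration above pins the tunnel MTU at 1500. As a rough sanity check on that value, every packet sent through the tunnel gains an outer IP header, a UDP header and Wireguard’s own framing, so a tunnel MTU equal to the link MTU means full-size packets get fragmented on the way. The sketch below works out the largest tunnel MTU that would avoid this. The helper name wg_tunnel_mtu is made up for illustration, and the link MTU of 1500 is an assumption about the path between the two machines.

```shell
# Hypothetical helper: largest tunnel MTU that fits inside one link-level packet.
# Wireguard wraps each packet in an outer IP header (20 bytes for IPv4,
# 40 for IPv6), an 8-byte UDP header and 32 bytes of Wireguard framing.
wg_tunnel_mtu() (
    link_mtu=$1
    outer_ip_version=${2:-6}   # assume the worst case (IPv6) unless told otherwise
    case $outer_ip_version in
        4) ip_header=20 ;;
        6) ip_header=40 ;;
        *) echo "outer IP version must be 4 or 6" >&2; exit 2 ;;
    esac
    echo $(( link_mtu - ip_header - 8 - 32 ))
)

wg_tunnel_mtu 1500 4   # prints 1440
wg_tunnel_mtu 1500 6   # prints 1420
```

If large transfers stall or throughput is lower than expected, trying one of these smaller values in the MTU line is a reasonable first experiment.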

Testing the Connection

Now that we have completed the configuration on both the local server and VPS, we can run sudo wg-quick up wg0 on both machines to establish the wg0 Wireguard interface.

To test that both peers can talk to one another and have established the tunnel, run sudo wg on both the local server and the VPS. The output should show a recent handshake and some data transferred. For example, running the command on the VPS:

ubuntu@production:~$ sudo wg

interface: wg0

public key: <client public key>

private key: (hidden)

listening port: <Wireguard Port>

peer: <peer id>

preshared key: (hidden)

endpoint: <server-ip>:<server port>

allowed ips: 192.168.5.2/32, 192.168.0.0/32, 10.0.0.0/24

latest handshake: 1 minute, 11 seconds ago

transfer: 16.88 GiB received, 552.44 MiB sent

This can be further verified by sending a ping from one to the other using their Wireguard subnet addresses.
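The handshake check can also be scripted. wg show wg0 latest-handshakes prints one line per peer containing the peer’s public key and the time of the last handshake as a Unix timestamp (0 if there has never been one). The sketch below decides whether such a timestamp looks recent. The helper name handshake_is_fresh and the 180-second threshold are assumptions chosen for illustration, not Wireguard settings.

```shell
# Hypothetical helper: decide whether a handshake timestamp looks recent.
# $1 = handshake time (Unix epoch seconds, 0 means "never")
# $2 = current time (Unix epoch seconds)
# $3 = maximum acceptable age in seconds (defaults to an assumed 180)
handshake_is_fresh() (
    handshake=$1
    now=$2
    max_age=${3:-180}
    [ "$handshake" -gt 0 ] && [ $(( now - handshake )) -le "$max_age" ]
)

# Usage on either machine (needs root and a running wg0 interface):
#   sudo wg show wg0 latest-handshakes | while read -r peer ts; do
#       if handshake_is_fresh "$ts" "$(date +%s)"; then
#           echo "$peer: tunnel looks healthy"
#       else
#           echo "$peer: no recent handshake"
#       fi
#   done
```

An old handshake on a completely idle tunnel is not necessarily a fault, so it is worth sending a ping across the tunnel before reading too much into the result.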

TCP Forwarding from VPS to Local Server with HAProxy

Now that the WireGuard tunnel is established, we need to forward incoming traffic from the VPS on port 443 to the local server over the WireGuard connection. Since SSL termination occurs on the local server, we’ll use TCP forwarding via the ProxyProtocol from HAProxy to Traefik. This ensures that the client’s IP is preserved, which is important for tools like Crowdsec.

Configure Traefik to Accept ProxyProtocol

In the static traefik.yml configuration, on both your HTTPS and HTTP entrypoints, you need to allow ProxyProtocol from IP addresses on the subnet of your wireguard tunnel. My Wireguard is configured to use the 192.168.5.0/24 subnet so requires the following:

entryPoints:
  http-external:
    address: ":81"
    http:
      redirections:
        entryPoint:
          to: https-external
          scheme: https
    proxyProtocol:
      trustedIPs:
        - 192.168.5.0/24
  https-external:
    address: ":444"
    proxyProtocol:
      trustedIPs:
        - 192.168.5.0/24

Adding this configuration tells Traefik to accept ProxyProtocol connections from the VPS and to parse the header that precedes each connection. This matters because HAProxy forwards raw TCP, so without the ProxyProtocol header Traefik would see every request as coming from the VPS’s Wireguard address rather than from the original client IP.
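To make it concrete what Traefik is parsing, the sketch below prints the human-readable (version 1) form of a ProxyProtocol header. The HAProxy setup in this post uses version 2, which is a binary encoding of the same fields, so this is an illustration of the idea rather than the exact bytes on the wire. The helper name proxy_v1_header and both IP addresses are made up for the example.

```shell
# Hypothetical helper: print the version 1 ProxyProtocol line that a proxy
# sends before the first byte of the real connection.
# $1 = client IP, $2 = IP the client connected to (the proxy's address),
# $3 = client port, $4 = port the client connected to
proxy_v1_header() {
    printf 'PROXY TCP4 %s %s %s %s\r\n' "$1" "$2" "$3" "$4"
}

# A made-up client (203.0.113.7) connecting to a made-up VPS address on port 443.
proxy_v1_header 203.0.113.7 198.51.100.10 51234 443
```

Traefik reads the client address from this header instead of from the TCP connection itself, which is why it only accepts the header from the addresses listed under trustedIPs.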

Configure HAProxy to Forward Connections

To forward traffic arriving at the VPS’s public IP to the local server as if it had arrived there directly, HAProxy will pass each connection on to Traefik with a ProxyProtocol header attached. Install the latest packaged version of HAProxy on the VPS:

$ sudo apt install haproxy

As of writing, the latest version was 2.8.5-1ubuntu3.2.

Now that it is installed, we need to update the configuration to forward traffic from port 443 on the VPS to port 444 on our local server. Update the configuration at /etc/haproxy/haproxy.cfg as follows:

global
    stats socket /var/run/api.sock user haproxy group haproxy mode 660 level admin expose-fd listeners
    log stdout format raw local0 info

defaults
    log global
    mode tcp
    option http-server-close
    timeout client 10s
    timeout connect 5s
    timeout server 10s

frontend https-frontend
    bind *:443
    option tcplog
    default_backend homebackend

backend homebackend
    balance roundrobin
    server localserver 192.168.5.2:444 check send-proxy-v2
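Before restarting HAProxy with a new configuration, it is worth letting it validate the file first, so that a typo does not take down a working proxy. The following is a minimal sketch, assuming HAProxy was installed from the Ubuntu package and runs as the haproxy systemd service. The function name apply_haproxy_config is made up for illustration.

```shell
# Hypothetical helper: only restart HAProxy when the new configuration parses.
# Run as root (for example from a `sudo -i` shell).
apply_haproxy_config() {
    cfg=${1:-/etc/haproxy/haproxy.cfg}
    # -c checks the configuration and exits without starting the proxy.
    if haproxy -c -f "$cfg"; then
        systemctl restart haproxy
    else
        echo "haproxy rejected $cfg, leaving the running instance untouched" >&2
        return 1
    fi
}

# Usage:
#   apply_haproxy_config
```

Once it has restarted, journalctl -u haproxy -f should show a log line for each forwarded connection.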

Does It Work??

To test that connections are being routed all the way from the VPS to the local server, create an NGINX container on your local server, served at test.domain.com, and add an A record in Cloudflare pointing at the VPS public IP:

NGINX Docker Compose

services:
  nginx-test:
    image: nginx:latest
    hostname: nginx-test
    restart: unless-stopped
    networks:
      - traefik
    labels:
      - "traefik.enable=true"
      - "traefik.docker.network=traefik"
      - "traefik.http.routers.test.entrypoints=http-external"
      - "traefik.http.routers.test.rule=Host(`test.domain.com`)"
      - "traefik.http.middlewares.test-https-redirect.redirectscheme.scheme=https"
      - "traefik.http.routers.test.middlewares=test-https-redirect"
      - "traefik.http.routers.test-secure.entrypoints=https-external"
      - "traefik.http.routers.test-secure.rule=Host(`test.domain.com`)"
      - "traefik.http.routers.test-secure.tls=true"
      - "traefik.http.routers.test-secure.tls.certresolver=cloudflare"
      - "traefik.http.routers.test-secure.service=test"
      - "traefik.http.services.test.loadbalancer.server.port=80"

networks:
  traefik:
    external: true

If everything works, you should be able to head to test.domain.com and see the welcome page. Congratulations, you have broken out of the CGNAT!
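To confirm that a request really travels through the VPS, without waiting for DNS to propagate, curl can be told to resolve the hostname to the VPS address directly. The following is a minimal sketch. The function name check_via_vps is made up for illustration, and the IP address in the usage line is a placeholder for your VPS public IPv4.

```shell
# Hypothetical helper: request a site through a specific IP and print the HTTP status.
# $1 = hostname served by Traefik, $2 = VPS public IPv4
check_via_vps() {
    # --resolve pins host:port to the given address, bypassing DNS for this request.
    curl -sS -o /dev/null -w '%{http_code}\n' \
        --resolve "$1:443:$2" "https://$1/"
}

# Usage (replace the address with your VPS public IPv4):
#   check_via_vps test.domain.com 198.51.100.10
```

A 200 here suggests that HAProxy, the tunnel and Traefik are all passing traffic. A connection error points at the firewall rules or HAProxy rather than at DNS.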

Troubleshooting

If it’s not working as expected, add the following static configuration to traefik.yml:

accessLog:
  filePath: "/logs/access.log"
  format: json
  bufferingSize: 0
  fields:
    headers:
      defaultMode: keep # we want to see the headers passed through
      names:
        User-Agent: keep

Restart Traefik and execute the following command to watch the end of the access log:

$ tail -f /logs/access.log

Now head to test.domain.com. If the traffic has reached your Traefik instance, you should see a new entry with the RequestHost of test.domain.com. If you are not seeing this, then connections are not being properly forwarded to your local server from the VPS.
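Once entries do show up, the same log can be used to check that the client IP is being preserved. The sketch below lists the distinct ClientHost values recorded for a given RequestHost. The function name client_ips_for_host is made up for illustration, and the pattern assumes the compact JSON layout (no spaces around the colons) with one entry per line.

```shell
# Hypothetical helper: list the distinct client IPs Traefik recorded for a host.
# $1 = hostname to look for, $2 = path to the JSON access log
client_ips_for_host() {
    grep -F "\"RequestHost\":\"$1\"" "$2" |
        sed -n 's/.*"ClientHost":"\([^"]*\)".*/\1/p' |
        sort -u
}

# Usage:
#   client_ips_for_host test.domain.com /logs/access.log
```

If the only address listed is 192.168.5.1, Traefik is seeing the VPS’s tunnel address rather than the real client, which suggests the ProxyProtocol header is not being sent or not being trusted.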

Closing Thoughts

Although this setup does work, it has one significant flaw: it doesn’t support IPv6 right now. Part of the reason is that my VPS has not been configured with an IPv6 address, so I think that is the first thing that needs to change.